So Do We Allow AI-Generated Content to Pervade in Creative Space?

I noted this topic down as a potential draft a few days ago as I had noticed a blog within Blaugust using AI to write all of their posts. Obviously, I am not the authority on what people can and can’t do, but with Belghast’s post going live yesterday, I felt it was time to discuss it. Which brings us to a question of ethics: Should AI-generated content belong in the Blaugust community? Spoiler warning, my answer is a no. But let me explain further, with (shock and gasp) nuance.

In Bel’s post, he basically puts forward the same question, asking us how we feel about the whole thing. While I have responded to the question privately on Discord, I still wanted to write this post to make my stance on AI and Large Language Model generated content clearly known. I feel this is important, as it’s a hot topic, particularly in technology. To be honest, as it has become so ubiquitous over the past few years, I am surprised it has taken this long to become an issue at all! But it is, and we’ve got to talk about it.

What is a Large Language Model?

Now, to be super clear, I am not a techy person. I have some basic grasp of AI, what it should be, what it currently is, but beyond that… white noise. So I will do my best to summarise as well as I am personally able, but I thoroughly recommend checking out Kelly Adams’ post from the other day as he explains a lot of what I want to say way better than I can. It’s a fantastic read. As he says:

“So what is ‘real’ artificial intelligence? More modern definitions usually involve something that humans can do that computers are traditionally not very good at: ‘understanding’ natural language (the way we speak) or visual input (images) are examples of modern AI.

The state of the art for AI systems today start with vast collections of billions of examples of whatever it is you want the AI system to ‘understand’. These collections are then processed to generate complex statistical probability models.”

So in essence, what we expect Artificial Intelligence to be is intelligent: able to replicate human thought processes with the power of a machine-like intellect, and able to understand the things it creates. At present, it’s all mathematics. Each individual AI has been trained on data available throughout the world wide web, and beyond in some cases, and can then pull together whatever seemingly fits best, as determined by its coding. It’s not a true intellect, it’s purely generative. Perhaps then we should be calling it something different. However, we know it as AI now and hell… I don’t know!

A Large Language Model, then, is the above but instead of producing images, it produces words. Something like ChatGPT, right? It pulls together articles, blog posts, fan-fiction, any written word that’s publicly available, or fed to it, and, having been trained on all of these things, can replicate the written word. According to TechRadar, “ChatGPT’s most original GPT-3.5 model was trained on 570GB of text data from the internet, which OpenAI says included books, articles, websites, and even social media.” And this isn’t the only LLM out there. Other notable ones are Microsoft’s Copilot (which just loves to turn itself on in Windows 11…), Google Gemini, Twitter’s Grok AI, and yeah. Loads of apps have their own. In Notion you can ask their AI to write something for you. It’s becoming completely inescapable.

Uncomfortable Facts

That leads me very nicely into the uncomfortable facts about AI. Those of us who use social media or write, whether for a job or for ourselves, are likely to have had our words given to these tools, the same as how visual artists’ work has been fed to the machine. Our passions, our work, our creativity, our lives are now a part of a thoughtless entity that just spits out something that is statistically relevant to a given prompt. It may not sound like us, but we’re in there, like it or not. And there are huge concerns around copyright and infringement when it comes to AI-generation. Perhaps it’s not copyright infringement, perhaps it is, but it still feels so inherently wrong.

I think Magi summed this up really well in his post when he said that using AI to generate an image “requires you to literally search for ‘cat holding an umbrella’ so that the program can vomit some amalgamation of various artists’ hard work and efforts onto your screen so that you can use it in whatever capacity you wanna use it in.” That’s really it, that’s the kicker. Generative AI cannot really be considered transformative (Magi goes into this more in his post). This is not Andy Warhol colourising repeating images of Marilyn Monroe, or somebody making a beautiful collage from old magazines. This is a piece of technology taking people’s art (usually without permission) and splicing it together. Yes, it makes something new but… does it? Surely an AI generator cannot be “transformative”, because it does not have the intelligence to transform anything knowingly. It is simply reaching towards the typed-in prompt, utilising somebody else’s style and art.

In essence, the generation process is effortless. Many do not consider the drawbacks of this, only viewing how their work can be streamlined utilising AI and LLMs. But making this technology so widely and easily available breeds laziness, which isn’t a word I like to use generally. A kid can get an LLM to write their essay for them, and it’s getting tougher and tougher for the frankly underpaid teachers to recognise when work has been produced by one of these systems. Isn’t that terrifying? Sure, AI can be a great aid for students, but using it simply to do their work for them? Gosh, I dread what this can mean going forwards.

And back on the topic of art. If a human is not directly involved in the creation process, there is no creation. Only generation. I’m not going to say that this isn’t art, I’m sure there are instances with all sorts of nuance where it can be. But to get very general, art is “a visual object or experience consciously created through an expression of skill or imagination.” (Britannica.com) AI and LLMs are not capable of this. AI “art” is just soulless images. LLM-generated writing is simply generic text, often riddled with misinformation.

Not to mention just how bad some of what AI produces is. Wilhelm’s post on the topic covers this better than I can at this point:

“This is AI. A chat bot that gives you the answers it mathematically believes you want to hear without validation of their correctness. There is an old saying about not trusting somebody to tell you the weather or if the sun rose in the east, and it applies in spades to AI. If the model somehow decides that the sun rises in the west and that it is warm and sunny despite the snowstorm currently raging, it will tell you that.

Meanwhile, every AI image that is kind of cool… the ones that get the right number of hands on people, the right number of fingers on hands, and little details like that… because, again, no intelligence here, just probability that in a scene like this a finger or arm in a particular place has a higher mathematical likelihood than not… and gets shared around is built on a huge pile of bad images that make no sense at all, that wouldn’t fool anybody into thinking an actual person did it.”

Did you know that people use LLMs to “inform decisions” on things such as “health evaluation and financial counsel”? (Forbes) And these systems can be very accurate, they can produce fantastic information. But they are not foolproof. People are picking up political information from ChatGPT and doing no further research. It genuinely alarms me that the human population is so willing to let LLMs dictate major life decisions, to trust them with things that affect their health, their livelihoods, how their country might be run… I’m flabbergasted. And that’s not to mention the issue with deepfakes and revenge porn, which have had some protections put into place in law, but each country’s laws differ, and not everybody follows them.

And of course, something that we bloggers are very familiar with: spam. AI has allowed spammers and scammers to flourish. They can type in a prompt, and the LLM can translate the language and word a comment to sound like a genuine response, only to drop a link taking you to who knows where. I’ve seen this in my comments, my emails, my streams, Discord… it’s everywhere, and it’s exhausting. At least for the most part, knowing that these things are issues helps you to navigate the shark-infested waters and remain safe, but as AI continues to evolve… how long for?

Benefits of the Model

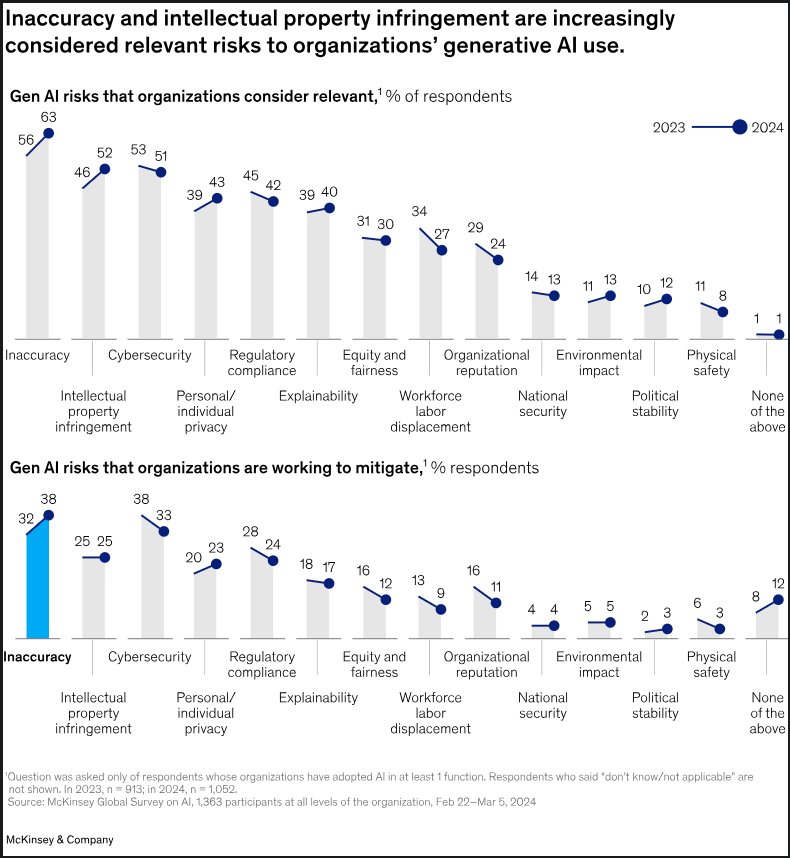

All this to say, there are benefits to AI. I’m honestly not too savvy on the good it can do and find it a little overwhelming, but I have definitely heard that a lot of coders utilise AI to great effect. There’s talk of it helping in the medical field (this is a fantastic rundown of the benefits and downsides of AI in the workplace, by the way). And as mentioned in the McKinsey article, more and more organisations are using AI to aid with workflow. This can be a huge boon to an overworked population, and if we can get the technology to a point where the information is trustworthy, oh the good it could do!

Personally, I find LLMs in particular super helpful. Yes, I know I’ve covered how they are dangerous and stealing our art, and I agree that they’re fishy, to be polite about it. However, as a disabled woman with heavy fatigue and regular brain fog (and oh boy, this post sure triggered that today), I find it more and more common that I’m blocked from doing something I need to do because my brain just isn’t working right. As an example, I am a Game Master of TTRPGs (usually Dungeons & Dragons and things of that ilk) and I love creating campaigns and one-shots and sessions, but the preparation and work that goes into it is sometimes a huge hurdle. So I open up ChatGPT and ask it for some ideas for NPCs, or perhaps for a specifically themed puzzle for a dungeon (I’m awful at puzzle creation but my players love them). I can ask ChatGPT to offer some ideas, and then I can use those to create something that fits my style, my game, and my players. This can save me a lot of frustration and spoons, but I’m not simply asking the LLM to do the work for me, merely getting a jumping-off point. And this is the kind of thing they are great for. Getting ideas, and running with them. You could even ask for some relevant blog post ideas, if you found yourself struggling! Tipa of Chasing Dings believes that using AI to write for you is perfectly okay, and their post on the subject is an interesting take that is also very important to the conversation!

So Should We Allow AI-Generated Content to Pervade in Creative Space?

Here’s the thing. As AI currently stands, I personally feel that the negatives vastly outweigh the positives. That’s not to say that I think nobody should use it, clearly there is some good to be found. And while I don’t believe using AI-generated art or writing has a place in blogging, if it’s purely illustrative, it’s not my place to say what you can and can’t do on your personal blog. I actually used it very briefly in my post yesterday to outline what I wanted the ghost to say, to be frank.

Are we, as creatives, afraid of being replaced? Eh… No, not really, what the technology produces is soulless and bland. Paeroka’s experiment with WordPress’ AI Assistant is a great example of this. While it can imitate human creativity reasonably well, the inspiration and personality that goes into a piece of creative work cannot be replicated. Something a human has thought about and taken the time to write is going to be more interesting to read than whatever an LLM can spit out and, let’s be honest, more factual. It doesn’t matter if the technology can do it, I don’t feel like we should be using it this way. It feels like a huge kick in the teeth to anybody who’s ever written content online for a website. Given that these LLMs are trained on our words without our consent and can then be used to “write” blog posts, I do not believe we should allow LLM-generated content within Blaugust. Not how the technology currently stands, at least. That is, of course, aside from what I mentioned under “Benefits”, and I will not judge people individually for generating the odd image or a snippet of text, using it as a tool to help where they might be stuck, playing around with it, or even other things that I may not have thought of. This post was a lot, folks, I definitely missed things.

Perhaps one day the technology will be good enough to replace the human element. I truly fear that day and really hope that we outline what is and isn’t okay usage for these models. And meanwhile, we have to understand what this technology is, where it came from, what it is doing out there, and the fact that it is going to draw ire from creatives.

There is potentially some light, however. Avaruussuo wrote a fascinating post covering their thoughts on using AI software creatively. I love the concept of using AI to create a text adventure! It may not have gone well for them the first time around, but who knows what the future holds? As more of these systems crop up, learning from each other, perhaps this content becomes more of a collage of things past. My friend actually linked me to a piece that looks at what is “in the works to detect and stop this theft” (to quote her). It’s certainly not all doom and gloom, at the very least!

Oh, and by the way? As AI is becoming more and more of a thing, we’re also seeing a rise in books and movies on the subject. There are two movies I thoroughly recommend on the subject of artificial intelligence and exactly what that could look like: Her and The Artifice Girl. Very different to the likes of the Terminator, that’s for sure.

Huge thanks to Rowhina for providing so many incredible resources on this topic. 💞

6 thoughts on “So Do We Allow AI-Generated Content to Pervade in Creative Space?”

Well, I think my take is a little more nuanced after seeing the AI news blog that sparked the original discussion. That blog appears to be taking other publications’ news items, having an LLM rewrite them, and then posting them. That might be crossing a line.

If, for example, you told an LLM what points you wanted to get across and other information and out of it came this post I just read, then no, I don’t have a problem with that. You were involved in the process. LLMs don’t randomly decide to produce content; at every stage, they are asked by a human at some point to do so.

Do LLMs massively infringe on copyright? If I took your blog post and summarized it for someone, I wouldn’t be infringing your copyright. If I took that summary, posted it on my blog and called it mine, well, it’d be a mean thing to do, but I’m still not sure it’s infringing. Magi would argue that I had just made your content public domain against your will, but I’m not sure that’s correct either.

As you said, this is a complicated issue. I do look at the intentions of the LLM user to determine whether I feel their use was permissible or not, I guess.

Well, to be fair, I don’t have copyright to infringe on, but if somebody took my post and asked an LLM to reword it, I would still consider it plagiarism as the person is using my ideas without credit, right? 🙂

But yes, there’s a lot to it all. I barely skimmed the surface.

Very well written, Jaedia: lots of good thinking and references!

I suspect we will be debating when and where to use the current form of AI for quite some time to come. I don’t think the answers are necessarily obvious. Thinking about it and debating it is the right thing to do.

Thank you. 😊

For sure, especially as it evolves and more legislation comes into place and we learn more and figure things out. There’s SO MUCH grey area here that we just don’t have clear answers for and it’s difficult!

Honestly, I was wondering why there were so many “AI”-centric posts on the Discord…

Yeeeah… it was prompted, and then we all bounced off each other for a discussion, as we are wont to do! (Something I genuinely love about the blogging community on the whole)
